Migrating vCenter Server Appliance data partitions to LVM volumes

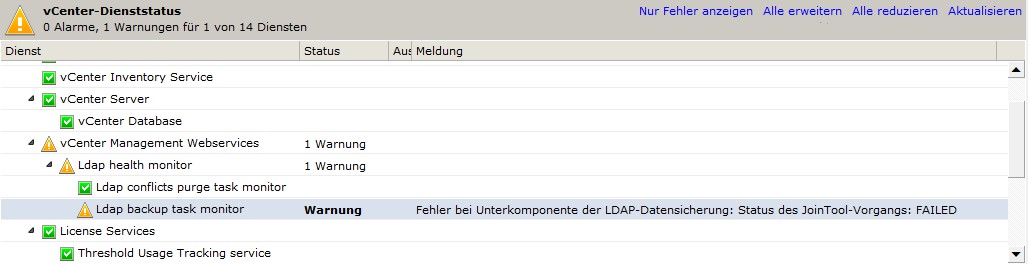

Recently I discovered the following hint in the vCenter service state overview:

Apparently there was an issue with the integrated LDAP service of the vCenter Server Appliance. Unfortunately, searching the internet for the corresponding error message was not very successful:

LDAP data backup subcomponent error: JoinTool operation status: FAILED

A first look at the system gave an interesting hint regarding the possible cause:

```
# df -h|grep log
/dev/sdb2 20G 20G 0 100% /storage/log
```

Because the appliance centrally collects the syslogs of all connected ESXi hosts, the log partition had run out of capacity. The immediate solution was quite simple: cleaning up the partition solved the issue and the warning in vCenter disappeared.
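To notice a filling partition before vCenter starts complaining, a quick look at the df output is enough. The following is a minimal sketch and not part of the appliance: the function name `full_filesystems` and the default threshold of 90 percent are my own assumptions, and it expects the POSIX output format of `df -P` (mount points without spaces).

```shell
# Sketch: print mount points whose usage is at or above a threshold (percent).
# Expects `df -P` output on stdin; column 5 is "Capacity", column 6 the mount point.
full_filesystems() {
    local threshold=${1:-90}   # 90 percent is an arbitrary example default
    awk -v t="$threshold" 'NR > 1 {
        pct = $5
        sub(/%$/, "", pct)     # "100%" -> "100"
        if (pct + 0 >= t + 0) print $6
    }'
}
```

Usage, for example interactively or from cron: `df -P | full_filesystems 90`.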

Time to fix the root cause for good: resizing the undersized partition.

The vCSA partitioning layout

The vCSA consists of two hard drives:

- Hard drive 1 (SCSI 0:0): 25 GB - operating system (SLES 11 SP2)

- Hard drive 2 (SCSI 0:1): 100 GB - database and logs/dumps

Unfortunately, LVM was not used when the partitioning layout was designed, which means that changing partition sizes requires application downtime. Beyond that, my experiences with manually altering conventional partition layouts have been mixed. LVM would offer several advantages here:

- more flexible storage distribution (no manual altering of partition tables required, etc.)

- volumes can be expanded online (no need to stop the VMware services)

- a clearly easier and "nicer" solution
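To illustrate what "expanded online" means in practice, here is a sketch that only prints the commands for growing one of the logical volumes created later in this article; it executes nothing. The function name `grow_lv_commands` and its arguments are hypothetical. It assumes ext3 on a kernel that supports growing a mounted file system with `resize2fs`, and that the volume group has free extents. Because `lv_db` is later created with `100%FREE`, that usually means adding another disk first; the optional fourth argument prints the `pvcreate`/`vgextend` steps for such a new partition.

```shell
# Sketch: print (not run) the commands to grow an ext3 logical volume online.
# $1 = volume group, $2 = logical volume, $3 = increase in lvextend syntax (e.g. 10G)
# $4 = optional new partition to add to the volume group first (e.g. /dev/sdd1)
grow_lv_commands() {
    local vg=$1 lv=$2 delta=$3 new_pv=${4:-}
    if [ -n "$new_pv" ]; then
        # Initialize the new partition as a physical volume and add it to the VG
        printf 'pvcreate %s\n' "$new_pv"
        printf 'vgextend %s %s\n' "$vg" "$new_pv"
    fi
    # Add free extents from the volume group to the logical volume
    printf 'lvextend --size +%s /dev/%s/%s\n' "$delta" "$vg" "$lv"
    # Grow the mounted ext3 file system to fill the enlarged volume
    printf 'resize2fs /dev/%s/%s\n' "$vg" "$lv"
}
```

For example, `grow_lv_commands vg_storage lv_log 10G` prints the `lvextend` and `resize2fs` lines for the log volume. Reviewing them before running them as root keeps a typo in a volume name from touching the wrong volume.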

By default, the layout looks like this:

```
# df -h|grep -i sd
/dev/sda3 9.8G 3.8G 5.5G 41% /
/dev/sda1 128M 21M 101M 18% /boot
/dev/sdb1 20G 3.4G 16G 18% /storage/core
/dev/sdb2 20G 16G 3.7G 81% /storage/log
/dev/sdb3 60G 1.8G 55G 4% /storage/db
```

Besides a swap partition, the first hard drive contains a boot and a root partition. The second hard drive (100 GB) is divided into a large database partition and two smaller partitions for core dumps and logs. The log partition is often undersized: it only offers 20 GB.

The missing LVM design can be retrofitted quite quickly.

The following approach requires application downtime for the initial migration, but future expansions can be done online. Please note that this trick is not supported by VMware.

First of all, an additional hard drive is added to the VM; I also chose 100 GB for it. Basically this size is sufficient (see above), but the default layout isn't always very reasonable. In my case I'm using vCenter with the small database setup because I only have to manage a small environment, so I don't need the 60 GB intended for database files (/storage/db). If you're managing a bigger environment, you might need more capacity here. In my opinion you should not reduce the partition for core dumps (/storage/core), or only with great care: if you run into serious issues with the vCenter Server Appliance, you might no longer be able to create complete core dumps.

My idea to optimize the layout looks like this (please keep in mind that you might need to adapt my layout to your system landscape!):

- partition the new hard drive (100 GB) as an LVM physical volume

- create a new LVM volume group "vg_storage"

- create new LVM logical volumes "lv_core" (20 GB), "lv_log" (40 GB) and "lv_db" (40 GB)

- create ext3 file systems (like before) on the new logical volumes and mount them temporarily

- switch into single-user mode and copy the data files

- alter /etc/fstab

By the way: I'm using VMware vCenter Server Appliance version 5.5, but this procedure can probably be adapted to older versions as well. I highly recommend creating a clone (or at least a snapshot) of the virtual machine beforehand. It can also be a good idea to back up the database and the application configuration as described in the following KB articles:

- KB 2034505: "Backing up and restoring the vCenter Server Appliance vPostgres database"

- KB 2063260: "Backing up and restoring the vCenter Single Sign-On 5.5 configuration for the vCenter Server Appliance"

- KB 2062682: "Backing up and restoring the vCenter Server Appliance Inventory Service database"

It is important to review my layout closely and adapt it if needed; ideally, check the current storage usage of your second hard drive first. I'm not responsible for any problems caused by insufficient volume sizes.
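One way to do this check is to compare the used space reported by `df -P` with the planned volume sizes. This is a sketch under assumptions: the function name `check_plan` and the plan file format (one `mountpoint size_in_GiB` pair per line) are invented here, and it only tests whether the current data fits, not how much headroom your environment needs.

```shell
# Sketch: compare current usage (from `df -P` on stdin) with planned sizes.
# $1 = plan file with lines "mountpoint size_in_GiB" (format invented for this example)
check_plan() {
    local plan_file=$1
    awk -v plan="$plan_file" '
        BEGIN {
            # Load the planned sizes into gib[mountpoint]
            while ((getline line < plan) > 0) {
                split(line, f, " ")
                gib[f[1]] = f[2] + 0
            }
        }
        # df -P reports 1024-byte blocks; column 3 is "Used", column 6 the mount point
        NR > 1 && ($6 in gib) {
            used_gib = $3 / 1024 / 1024
            status = (used_gib < gib[$6]) ? "OK" : "TOO SMALL"
            printf "%s used=%.1fG planned=%dG %s\n", $6, used_gib, gib[$6], status
        }'
}
```

For the layout above, a plan file (hypothetical path `/tmp/plan`) containing the lines `/storage/core 20`, `/storage/log 40` and `/storage/db 40` could be checked with `df -P | check_plan /tmp/plan`.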

First of all, you have to make sure that the system, which is based on SUSE Linux Enterprise Server 11 SP2, detects and activates LVM volumes at boot time. Otherwise the next boot will fail because fsck cannot check the newly created volumes. To achieve this, customize the following configuration file:

```
# cp /etc/sysconfig/lvm /etc/sysconfig/lvm.initial
# vi /etc/sysconfig/lvm
...
#LVM_ACTIVATED_ON_DISCOVERED="disable"
LVM_ACTIVATED_ON_DISCOVERED="enable"
```
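If you prefer not to edit the file interactively, the same change can be scripted. This is a sketch, not a SUSE tool: `enable_lvm_on_discovered` is a hypothetical helper that takes the file path as an argument (so it can be tried on a copy first), creates the `.initial` backup itself and replaces the active assignment instead of keeping the old line as a comment.

```shell
# Sketch: set LVM_ACTIVATED_ON_DISCOVERED="enable" in the given sysconfig file.
# $1 = path to the file (e.g. /etc/sysconfig/lvm); a .initial backup is created first.
enable_lvm_on_discovered() {
    local cfg=$1
    [ -f "$cfg" ] || { echo "no such file: $cfg" >&2; return 1; }
    # Keep an untouched copy next to the original, as in the manual procedure
    cp -p "$cfg" "$cfg.initial" || return 1
    # Rewrite the active (uncommented) assignment, whatever its current value
    sed -i 's/^LVM_ACTIVATED_ON_DISCOVERED=.*/LVM_ACTIVATED_ON_DISCOVERED="enable"/' "$cfg" || return 1
    # Fail if the file did not contain such an assignment at all
    grep -qx 'LVM_ACTIVATED_ON_DISCOVERED="enable"' "$cfg"
}
```

Run as `enable_lvm_on_discovered /etc/sysconfig/lvm`, it takes the place of both the `cp` and the `vi` step.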

This causes every detected LVM volume to be activated during system boot, before the file systems listed in /etc/fstab are mounted. If you prefer, you can instead limit this behavior to the volume group created later on by setting the following parameters:

```
#LVM_VGS_ACTIVATED_ON_BOOT=""
LVM_VGS_ACTIVATED_ON_BOOT="vg_storage"
...
LVM_ACTIVATED_ON_DISCOVERED="disable"
```

This makes sure that only the created LVM volume group (vg_storage) is activated during the boot process - other volume groups are ignored.
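Because /etc/sysconfig files use shell variable syntax, the effective values can be double-checked by sourcing the file in a subshell. The helper name `show_lvm_boot_settings` is hypothetical, and the approach assumes that the file only contains variable assignments and comments.

```shell
# Sketch: print the effective LVM boot settings from a sysconfig-style file ($1).
# The subshell keeps the sourced variables out of the calling shell.
show_lvm_boot_settings() {
    (
        # Start from a clean slate so values from the calling shell cannot leak in
        unset LVM_ACTIVATED_ON_DISCOVERED LVM_VGS_ACTIVATED_ON_BOOT
        . "$1" || exit 1
        printf 'LVM_ACTIVATED_ON_DISCOVERED=%s\n' "${LVM_ACTIVATED_ON_DISCOVERED-<unset>}"
        printf 'LVM_VGS_ACTIVATED_ON_BOOT=%s\n' "${LVM_VGS_ACTIVATED_ON_BOOT-<unset>}"
    )
}
```

Running `show_lvm_boot_settings /etc/sysconfig/lvm` shows the value that actually wins when a variable is assigned more than once in the file.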

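On the newly added 100 GB hard drive (/dev/sdc), a primary partition of type 8e (Linux LVM) spanning the whole disk is created and then initialized as an LVM physical volume. The two empty lines in the fdisk input accept the default first and last cylinder:

```
# fdisk /dev/sdc <<EOF
n
p
1


t
8e
p
w
EOF
# pvcreate /dev/sdc1
```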
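Since fdisk writes to whatever device it is given, it is worth confirming beforehand that /dev/sdc really is the new, empty disk; if a hot-added disk does not show up at all, a SCSI bus rescan or a reboot may be needed first. A minimal sketch: the hypothetical `is_empty_disk` succeeds only if the disk appears in /proc/partitions (or a file in the same format) and has no partitions yet.

```shell
# Sketch: succeed only if disk $1 (e.g. sdc) is listed and has no partitions yet.
# $2 = table in /proc/partitions format (default /proc/partitions); column 4 = name
is_empty_disk() {
    local disk=$1 table=${2:-/proc/partitions}
    awk -v d="$disk" '
        $4 == d { found = 1 }                      # the whole disk is present
        $4 ~ ("^" d "[0-9]+$") { part = 1 }        # e.g. sdc1 would be a partition
        END { exit !(found && !part) }' "$table"
}
```

For example: `is_empty_disk sdc && echo "sdc looks unused"`.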

After that, the LVM volume group and logical volumes are created. I reduced the size of the database volume to 40 GB (it receives all remaining free space of the volume group) and doubled the capacity of the log volume:

```
# vgcreate vg_storage /dev/sdc1
# lvcreate --size 20G --name lv_core vg_storage
# lvcreate --size 40G --name lv_log vg_storage
# lvcreate --extents 100%FREE --name lv_db vg_storage
```
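Before creating file systems, the result can be checked against the plan. This sketch reads the output of `lvs --noheadings --units g --nosuffix -o lv_name,lv_size vg_storage` (the exact formatting may vary between LVM versions); the function `check_lvs` and its `name:min_GiB` argument format are made up for this example. `lv_db`, created with `100%FREE`, will usually be a few MiB smaller than 40 GiB because partition offset and LVM metadata take a little space, so a slightly lower minimum is used for it below.

```shell
# Sketch: verify that logical volumes exist with at least the expected size.
# stdin: "name size_in_GiB" lines (e.g. from lvs as shown above)
# $@   : expected entries as name:min_GiB (hypothetical format for this sketch)
check_lvs() {
    awk -v want="$*" '
        { size[$1] = $2 + 0 }
        END {
            n = split(want, items, " ")
            rc = 0
            for (i = 1; i <= n; i++) {
                split(items[i], kv, ":")
                if (!(kv[1] in size)) { print "missing: " kv[1]; rc = 1 }
                else if (size[kv[1]] < kv[2] + 0) { print "too small: " kv[1]; rc = 1 }
            }
            exit rc
        }'
}
```

Usage: `lvs --noheadings --units g --nosuffix -o lv_name,lv_size vg_storage | check_lvs lv_core:20 lv_log:40 lv_db:39`.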

Afterwards ext3 file systems are created on the new logical volumes:

```
# mkfs.ext3 /dev/mapper/vg_storage-lv_core
# mkfs.ext3 /dev/mapper/vg_storage-lv_log
# mkfs.ext3 /dev/mapper/vg_storage-lv_db
```

It is a good idea to update the initial ramdisk so that LVM2 support is available to the kernel early during boot:

```
# mkinitrd_setup
# file /lib/mkinitrd/scripts/setup-lvm2.sh
# mkinitrd -f lvm2
Scanning scripts ...
Resolve dependencies ...
Install symlinks in /lib/mkinitrd/setup ...
Install symlinks in /lib/mkinitrd/boot ...

Kernel image: /boot/vmlinuz-3.0.101-0.5-default
Initrd image: /boot/initrd-3.0.101-0.5-default
Root device: /dev/sda3 (mounted on / as ext3)
Resume device: /dev/sda2
Kernel Modules: hwmon thermal_sys thermal processor fan scsi_mod scsi_transport_spi mptbase mptscsih mptspi libata ata_piix ata_generic vmxnet scsi_dh scsi_dh_rdac scsi_dh_alua scsi_dh_hp_sw scsi_dh_emc mbcache jbd ext3 crc-t10dif sd_mod
Features: acpi dm block lvm2
27535 blocks
```
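The point of this step is that `lvm2` shows up in the `Features:` line of the mkinitrd summary. A minimal sketch of a check, assuming mkinitrd prints its summary in the format shown above (the helper name `initrd_has_lvm2` is hypothetical):

```shell
# Sketch: succeed only if the mkinitrd summary on stdin lists lvm2 as a feature.
initrd_has_lvm2() {
    # Scan the words after "Features:" and look for an exact "lvm2"
    awk '/^Features:/ { for (i = 2; i <= NF; i++) if ($i == "lvm2") ok = 1 }
         END { exit !ok }'
}
```

Usage: `mkinitrd -f lvm2 2>&1 | initrd_has_lvm2 || echo "lvm2 missing from initrd"`.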

After that, temporary mount points are created and the new file systems are mounted:

```
# mkdir -p /new/{core,log,db}
# mount /dev/mapper/vg_storage-lv_core /new/core
# mount /dev/mapper/vg_storage-lv_log /new/log
# mount /dev/mapper/vg_storage-lv_db /new/db
```

For copying the data I adapted VMware KB article 2056764 ("Increase the disk space in vCenter Server Appliance"). First, switch into single-user mode to make sure that no VMware services are running and no open files prevent a consistent copy. Afterwards, the files are copied using cp and /etc/fstab is altered (after creating a backup). Finally, the partitions are mounted from their new volumes and the temporary mount points are removed:

```
# init 1
# cp -a /storage/db/. /new/db/
# cp -a /storage/log/. /new/log/
# cp -a /storage/core/. /new/core/
# umount /{new,storage}/{db,log,core}
# cp /etc/fstab /etc/fstab.copy
# sed -i -e 's#/dev/sdb1#/dev/mapper/vg_storage-lv_core#' /etc/fstab
# sed -i -e 's#/dev/sdb2#/dev/mapper/vg_storage-lv_log#' /etc/fstab
# sed -i -e 's#/dev/sdb3#/dev/mapper/vg_storage-lv_db#' /etc/fstab
# mount /storage/{db,log,core}
# rmdir /new/{db,core,log} /new
```
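Between the `cp` and `umount` steps, still in single-user mode, it can be reassuring to verify each copy. This is a sketch, not part of the KB procedure: the hypothetical `verify_copy` compares file contents with `diff -rq` and then ownership and permissions with GNU `find -printf`, ignoring timestamps and the top-level mount point directory itself. It would also reveal files a copy missed, such as hidden files that a `*` glob does not match. Note that `diff` follows symbolic links, so dangling links may be reported as errors.

```shell
# Sketch: succeed only if tree $2 matches tree $1 in content, owner, group and mode.
verify_copy() {
    local src=$1 dst=$2 a b rc
    # 1) File contents: list files that differ or exist on one side only
    diff -rq "$src" "$dst" || return 1
    # 2) Metadata: relative path, numeric owner, group and octal mode of every entry
    #    (GNU find -printf; -mindepth 1 skips the mount point directory itself)
    a=$(mktemp) || return 1
    b=$(mktemp) || { rm -f "$a"; return 1; }
    (cd "$src" && find . -mindepth 1 -printf '%P %U %G %m\n' | sort) > "$a"
    (cd "$dst" && find . -mindepth 1 -printf '%P %U %G %m\n' | sort) > "$b"
    cmp -s "$a" "$b"; rc=$?
    rm -f "$a" "$b"
    return $rc
}
```

Usage: `verify_copy /storage/db /new/db`, and likewise for `log` and `core`.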

The output of df confirms that the change was applied:

```
# df -h|grep storage
/dev/mapper/vg_storage-lv_core 20G 3.4G 16G 18% /storage/core
/dev/mapper/vg_storage-lv_log 40G 15G 23G 40% /storage/log
/dev/mapper/vg_storage-lv_db 40G 1.7G 36G 5% /storage/db
```
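Besides df, /proc/mounts shows the device and file system type behind each mount point. A minimal sketch, assuming the volumes appear under their /dev/mapper names as in the fstab entries: the hypothetical `check_storage_mounts` fails if any /storage mount is not an ext3 file system on a vg_storage logical volume, or if no /storage mount is found at all.

```shell
# Sketch: fail unless every /storage/* mount is ext3 on a vg_storage logical volume.
# $1 = mount table in /proc/mounts format (device mountpoint fstype options ...)
check_storage_mounts() {
    local mounts=${1:-/proc/mounts}
    awk '$2 ~ "^/storage/" {
            seen++
            if ($1 !~ "^/dev/mapper/vg_storage-" || $3 != "ext3") {
                print "unexpected: " $2 " on " $1 " (" $3 ")"
                bad = 1
            }
        }
        END { exit (bad || seen == 0) }' "$mounts"
}
```

Called without arguments, it reads /proc/mounts; it prints nothing and returns 0 when everything is in place.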

Switching back to the default runlevel 3 should now work without problems:

```
# init 3
```

It is a good idea to reboot once to make sure that the new LVM volumes are also available after the next boot. Once you have verified that VMware vCenter still works like a charm, you can remove the former data hard drive from the virtual machine configuration.
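Before the old disk is detached, a last check that nothing in /etc/fstab still refers to it does not hurt. The sketch below assumes the fstab references devices by `/dev/sdX` names, as on this appliance; `still_references` is a hypothetical helper that prints any non-comment line mentioning the old device and succeeds only if it found one. Since LVM identifies physical volumes by their on-disk metadata rather than by device name, it should not matter if the new disk shows up as /dev/sdb after the old one has been removed.

```shell
# Sketch: print active fstab lines that still mention an old device.
# $1 = fstab path, $2 = old device prefix (e.g. /dev/sdb); success = references found
still_references() {
    local fstab=$1 old=$2
    # Drop comment lines, then search the rest for the old device name literally
    grep -v '^[[:space:]]*#' "$fstab" | grep -F -- "$old"
}
```

Usage: `still_references /etc/fstab /dev/sdb || echo "no references left"`.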