New VSAN 6.2 home lab

In 2008 I started working as a VMware administrator and thus also began using vSphere at home. You might know this - as an IT person you want a lab at home that is comparable to the infrastructure at work. 🙂

The hardware half-life is quite short and especially when virtualizing workloads you can reach memory and storage limits very quickly. Until now I had to replace my hardware every two years:

| Years | Server | Hypervisor | CPU | RAM |
|---|---|---|---|---|
| 2008-2010 | HP ProLiant DL380 G3 | ESX 3.5 | 2x Intel Xeon @ 3.2 GHz | 6 GB |
| 2010-2011 | HP ProLiant DL140 G1 | ESXi 3.5 | 2x Intel Xeon @ 2.4 GHz | 4 GB |
| 2011-2012 | D.I.Y. server | ESXi 4.1 | Phenom X6 1090T @ 3.2 GHz | 16 GB |
| 2012 | HP ProLiant MicroServer G7 | ESXi 5.0 | AMD N36L @ 1.3 GHz | 8 GB |
| 2013-2014 | HP ProLiant MicroServer G7 | ESXi 5.1/5.5 | AMD N40L @ 1.5 GHz | 16 GB |
| 2014-2016 | D.I.Y. server | ESXi 5.5/6.0 | Intel Xeon E3-1230 @ 3.2 GHz | 32 GB |

After the last upgrade two years ago I needed new hardware again this year.

Current setup

In my current setup I combined NAS and hypervisor on a single machine, which reduced power consumption. In earlier installations, I also ran public DMZ workloads on the same server that hosted internal VMs. For the combined setup this was not an option, as a remote attacker could have gained access to the NAS by compromising the hypervisor through a public VM. I know, this is a rather theoretical risk - but it is possible. To make the setup more secure, I decided to move the DMZ applications to dedicated Raspberry Pis in a separate VLAN.

The problem with my current setup is that the memory resources are exhausted and the storage throughput is not as good as I would like. When designing the setup, I decided to go for a board that supports up to 32 GB of memory. For connecting hard drives, I chose the HP SmartArray P400 controller, which is pretty outdated now.

For VSAN tests I bought two HP ProLiant MicroServer G8 in the meantime and upgraded them to 16 GB memory and SSD storage.

New solution

I think I never edited a blog post as often as this one. Unfortunately I had to change hardware parts a lot in the last 6 weeks.

Since the GA release of vSphere 6, I really wanted to get my hands on VSAN. To implement a supported setup, you need at least two hosts. Simply adding two SSDs to the existing server is problematic, as the P400 controller does not officially support SSDs - on several forums users report bad SSD performance.

At a glance, my requirements for the new hardware were:

- 64 GB memory - DDR3 preferred to use my pre-existing modules

- IPMI remote management

- Dual Gigabit Ethernet interfaces for teaming

2-node cluster

Replacing the single server with two nodes looked interesting to me. The advantage is that I finally can use VSAN in a supported way.

At first I ordered two ASRock Rack E3C224D4I-14S mainboards. I did not know that ASRock also offers workstation and server mainboards. Until now I only knew the consumer products of the Taiwanese manufacturer. Optimistic, but still having some doubts, I ordered the two mainboards - unfortunately my doubts turned out to be justified. One of the mainboards was DOA (dead on arrival), the second board died after a reboot a couple of days later. It was also really annoying that one of the boards came with an empty BIOS battery. To be honest, I don't plan to buy hardware from ASRock again - I can hardly recommend buying the product mentioned above.

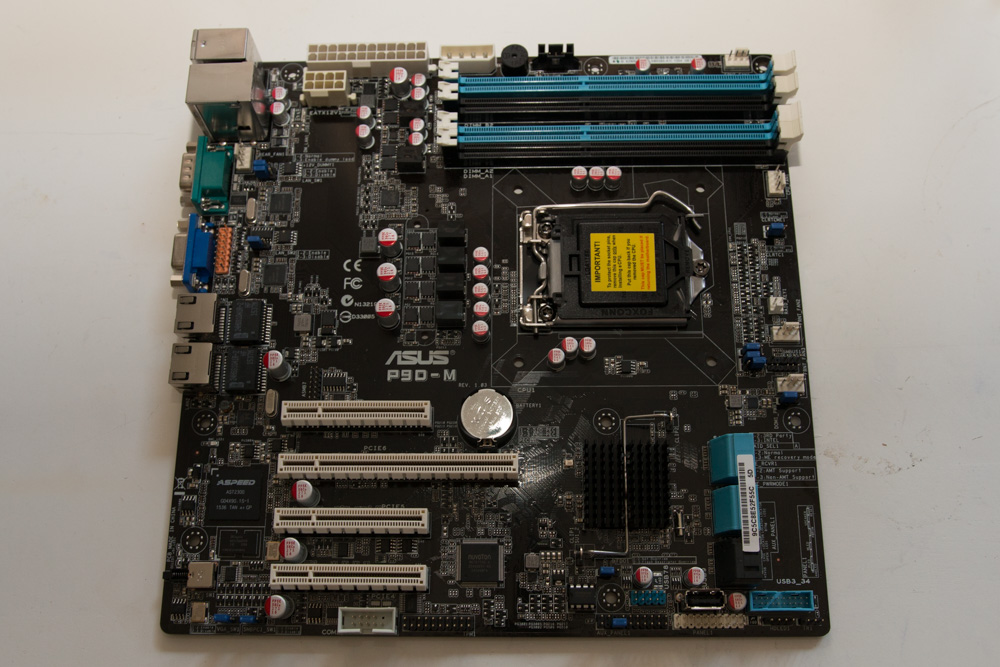

In the meantime, I unfortunately also had issues finding the right case. The cases were too small and the product descriptions were wrong. Finally I decided to go for the ASUS P9D-M, which uses the Intel C224 chipset and two Intel i210AT network controllers. I chose the older 1150 socket rather than the newer 1151 socket so that I could reuse my pre-existing DDR3 memory. Newer boards also support 32 or even 64 GB of memory - but only with DDR4 modules, and with Xeon processors if you plan to use ECC memory. This would have increased the total costs.

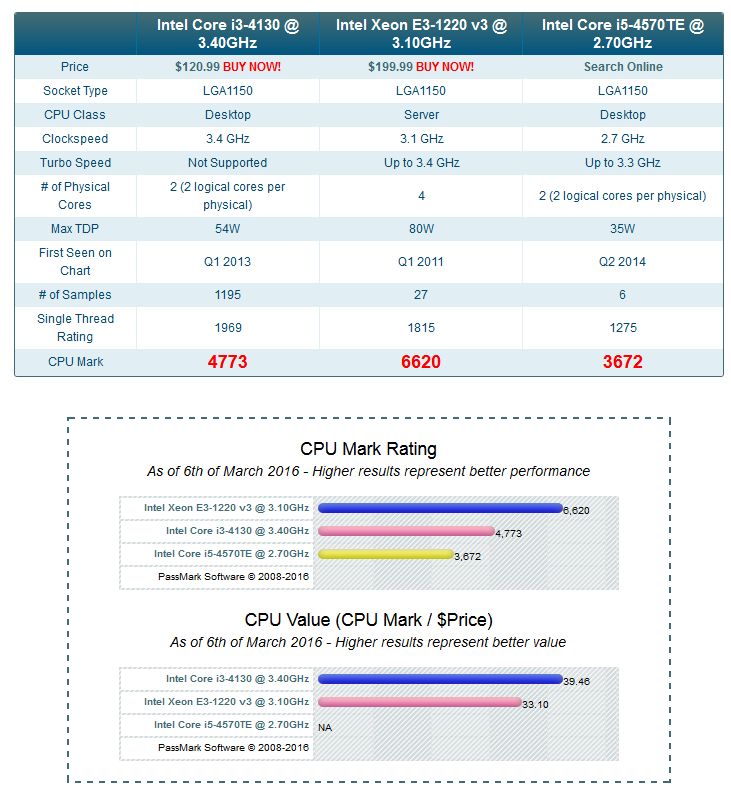

To keep power consumption moderate I was looking for an energy-efficient CPU. To be honest, the Xeon E3-1230 of my previous setup was overkill. Most of the time I did not use it at full capacity, and its TDP of 80 watts might have hurt efficiency, even with power-saving settings enabled. When selecting the CPU, a low TDP and ECC support were important to me - in the end, I had to pick from the following products:

| CPU | Cores | Frequency | TDP | Cache | Release | Price |
|---|---|---|---|---|---|---|
| Xeon E3-1220v3 | 2/4 | 3.1 GHz | 80 W | 8 MB | Q2 13 | 230 € |
| Core i5-4570TE | 2/4 | 2.7 GHz | 35 W | 4 MB | Q2 13 | 200 € |
| Core i3-4360T | 2/4 | 3.2 GHz | 35 W | 4 MB | Q3 14 | 150 € |
| Core i3-4130 | 2/4 | 3.4 GHz | 54 W | 3 MB | Q3 13 | 140 € |

Because I needed to buy two CPUs, I ditched the Xeon because of its high price and TDP. I also dropped the i3-4130 because of its small cache of 3 MB. I compared all CPUs on cpubenchmark.net and was surprised to see that the i3-4360T beats the i5-4570TE in the benchmarks.

Finally, I decided to go for the i3-4360T because of its low TDP and moderate cache - at about 150 Euros this really looks like a fair price/performance ratio to me.
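The elimination steps above can be sketched as a small filter over the comparison table. This is only an illustration: the thresholds `max_tdp_watt` and `min_cache_mb` and the "cheapest remaining CPU" tie-breaker are assumptions chosen to reproduce the reasoning, not rules taken from the post, and the benchmark comparison is left out because no scores are given here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cpu:
    name: str
    ghz: float
    tdp_watt: int
    cache_mb: int
    price_eur: int


# Values copied from the comparison table above.
CANDIDATES = [
    Cpu("Xeon E3-1220v3", 3.1, 80, 8, 230),
    Cpu("Core i5-4570TE", 2.7, 35, 4, 200),
    Cpu("Core i3-4360T", 3.2, 35, 4, 150),
    Cpu("Core i3-4130", 3.4, 54, 3, 140),
]


def shortlist(cpus, max_tdp_watt=60, min_cache_mb=4):
    """Drop CPUs with a high TDP or a small cache (thresholds are assumptions)."""
    return [
        c for c in cpus
        if c.tdp_watt <= max_tdp_watt and c.cache_mb >= min_cache_mb
    ]


def pick(cpus):
    """Assumed tie-breaker: take the cheapest CPU that survived the filter."""
    return min(cpus, key=lambda c: c.price_eur)


remaining = shortlist(CANDIDATES)
choice = pick(remaining)
print([c.name for c in remaining])
print(choice.name)
```

With these assumed thresholds the Xeon and the i3-4130 drop out, and the cheaper of the two remaining 35 W parts is selected.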

On Twitter I stumbled upon William Lam's newest Intel NUC VSAN setup. Those single-board computers now combine considerable CPU power with low power consumption and up to 32 GB of memory - so why not go for this? It's simple - they still offer no ECC, no IPMI and no dedicated second network port, and they are also quite expensive if you want the maximum possible memory (about 650 Euros per node, without SSD). Another possibility would have been the Xeon D-1500 product family Tschokko told me about. This concept is mostly about embedded hardware utilizing energy-efficient but powerful Xeon processors and up to 128 GB of DDR4 memory - awesome! Unfortunately the price is pretty high. Most boards come without memory modules and already cost more than 1000 Euros. With the appropriate memory modules, it is easy to spend 2000 Euros.

After hours and hours of research, I finally decided to order the following components:

| Part | Product | Price | Link (germany) |

|---|---|---|---|

| Mainboard | ASUS P9D-M | 165 € | [click me!] |

| Processor | Intel i3-4360T | 150 € | [click me!] |

| Memory | 32 GB DDR3 ECC Kingston ValueRAM | - | - |

| Cache SSDs | Samsung EVO 850 250 GB | - | - |

| Capacity SSDs | OCZ Trion 150 480 GB | 99 € | eBay deal |

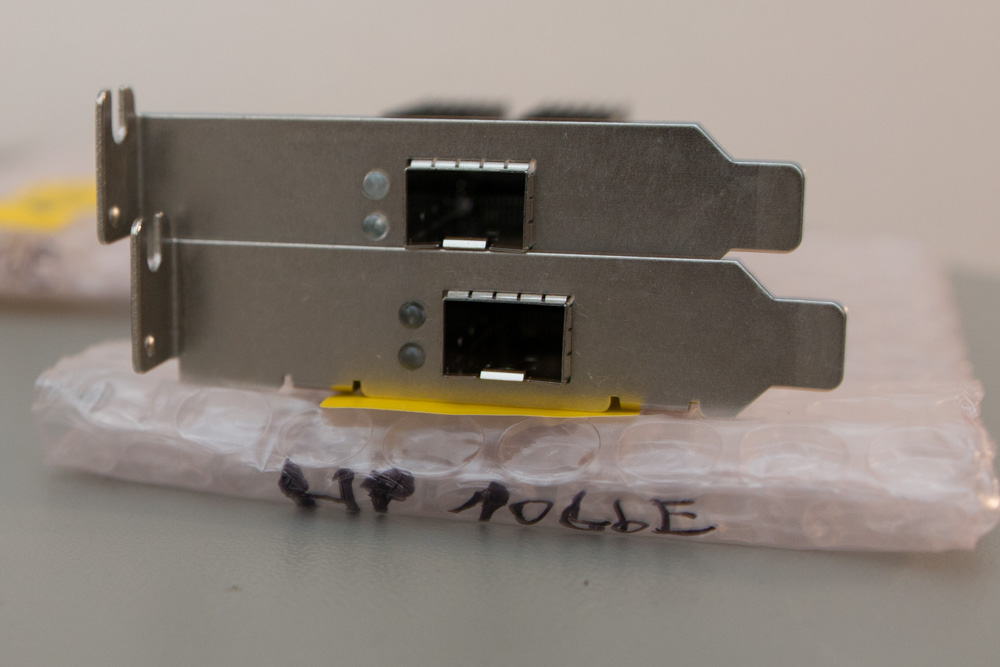

| Network cards | Mellanox Connect-X 2 kit | 60 € | eBay |

| Fan | LC-Power LC-CC-85 | 8 € | [click me!] |

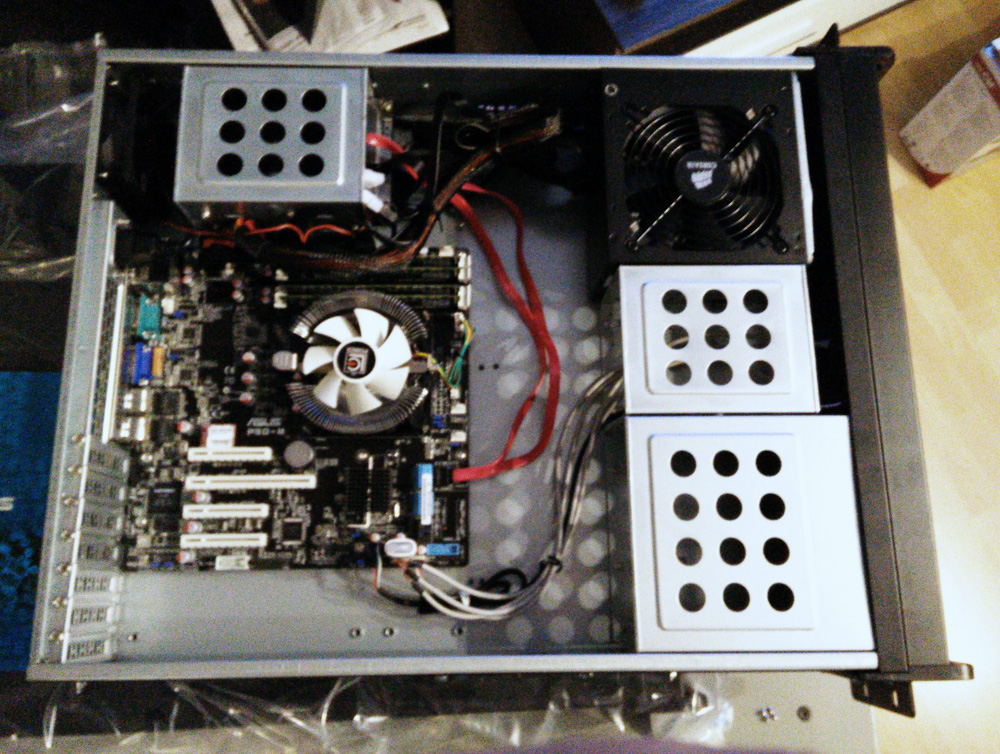

| Case | Inter-Tech IPC 2U-2098 | 75 € | [click me!] |

Because I'm able to reuse pre-existing SSDs and memory modules, the total costs are about 1100 Euros. The hard drives of the hybrid setup have been replaced by two additional SSDs that I ordered in a deal. For the cluster node interconnect I bought 10 Gigabit Ethernet network cards to have enough bandwidth for VSAN.

So, after selling the obsolete hardware parts it's quite a manageable upgrade. But I'm quite sure that the 64 GB memory will be exhausted in a year again.. 🙂
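As a rough cross-check of the total mentioned above, the prices from the parts table can be added up for two nodes. The quantities are assumptions, not stated in the post: one mainboard, CPU, fan, case and capacity SSD per node, a single network card kit covering both nodes, and no cost for the reused memory and cache SSDs.

```python
# Prices in Euros, taken from the parts table; quantities are assumptions.
PER_NODE_EUR = {
    "mainboard": 165,
    "processor": 150,
    "fan": 8,
    "case": 75,
    "capacity_ssd": 99,
}
SHARED_EUR = {
    "network_card_kit": 60,  # assumed to be one kit for both nodes
}
NODES = 2


def total_cost(per_node, shared, nodes):
    """Sum per-node parts for every node and add the shared parts once."""
    return nodes * sum(per_node.values()) + sum(shared.values())


total = total_cost(PER_NODE_EUR, SHARED_EUR, NODES)
print(f"Estimated total: {total} EUR")
```

Under these assumptions the estimate lands a little below the stated figure of about 1100 Euros; different quantities or extras such as cables would shift it.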

What about the NAS and the DMZ?

Well, running everything on a single server might be more energy-efficient, but it also decreases flexibility - which in turn makes finding new hardware harder. In my new concept I'm moving my NAS back to one of the remaining MicroServers. Previously, I passed a dedicated SAS controller through to the NAS VM to access the individual hard disks - this functionality is called VMDirectPath I/O and requires a special CPU feature named VT-d (Intel Virtualization Technology for Directed I/O). Normally, this feature is only available on Xeon processors. Because the NAS no longer runs as a VM, the new nodes do not need it, which made the selection of the new CPU easier. I also migrated the DMZ workloads back to virtual machines, making the Raspberry Pis obsolete.

In comparison with the former setup, three servers instead of one are now running permanently. Strictly speaking, the previous setup also required three hosts to run permanently - the Raspberry Pis were hosting my DMZ workloads - but, of course, embedded hardware has a much lower power consumption than conventional servers.. 🙂

I configured the individual servers to save energy (ACPI C-states and P-states, hard disk standby).

Photos

Some photos of the setup: